Design Dropbox | Client | Server | Data Sync

How to design Dropbox: client and server components with data sync

Designing a Dropbox-like system involves creating a distributed file storage and synchronization platform that allows users to store, access, and sync their files across various devices and locations. Below are some key responsibilities of the client:

Monitoring the workspace for any changes.

Uploading or downloading file changes to/from the remote server.

Handling conflicts that may arise due to offline usage or concurrent updates.

Updating file metadata on the remote server when changes occur.

Consider a 1 GB file that receives three consecutive minor changes, synced by a naive client that always transfers the whole file. On each change, the entire 1 GB file is uploaded to the remote server and then downloaded by another client. In total this consumes 3 GB of upload bandwidth and 3 GB of download bandwidth. Download bandwidth also grows in proportion to the number of clients watching the file.

That is 6 GB of bandwidth for just a few minor changes! Worse, if connectivity is lost mid-transfer, the client must re-upload or re-download the entire file. This waste of bandwidth prompts us to look for a better design.

Now consider a different approach: a client that splits files into 4 MB chunks and uploads those chunks to the remote server individually. This approach, which we'll call the 'Smart Dropbox Client,' significantly reduces bandwidth usage:

When a file is modified, the client identifies which chunk has changed and uploads only that chunk to the remote server. Other clients watching the file are notified of the changed chunk and download only that portion. If each of the three minor changes falls within a single 4 MB chunk, syncing them costs 3 × 4 MB = 12 MB of upload plus 12 MB of download: just 24 MB in total, compared with the previous 6 GB.

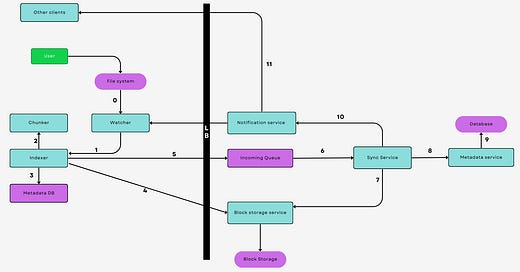
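The bandwidth comparison above is simple arithmetic, sketched below. The function name is hypothetical, and the sketch assumes one watching client and that every minor change touches exactly one 4 MB chunk; sizes are in MB, with 1 GB taken as 1024 MB.

```python
def sync_traffic_mb(mb_per_change, changes, watching_clients=1):
    """Total upload + download traffic (MB) needed to propagate `changes` edits."""
    upload = mb_per_change * changes                       # the editing client uploads once per change
    download = mb_per_change * changes * watching_clients  # every watching client downloads each change
    return upload + download

FILE_MB = 1024  # the 1 GB file from the example
CHUNK_MB = 4    # chunk size used by the smart client

naive = sync_traffic_mb(FILE_MB, changes=3)   # whole file sent on every change
smart = sync_traffic_mb(CHUNK_MB, changes=3)  # assumes each change touches one chunk
print(f"naive: {naive / 1024} GB, smart: {smart} MB")  # naive: 6.0 GB, smart: 24 MB
```

Passing `watching_clients=2` shows how only the download term grows as more devices watch the same file.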

With this optimization in mind, let's delve into the components of the optimized client and the server-side services it works with:

Client Metadata Database: This database stores information about different files in the workspace, file chunks, chunk versions, and file locations in the file system. It can be implemented using a lightweight database like SQLite.
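A minimal sketch of what such a SQLite schema might look like, using Python's built-in `sqlite3` module. The table and column names (`files`, `chunks`, `chunk_hash`, and so on) are assumptions for illustration, not a schema taken from Dropbox.

```python
import sqlite3

SCHEMA = """
CREATE TABLE IF NOT EXISTS files (
    file_id INTEGER PRIMARY KEY,
    path    TEXT NOT NULL UNIQUE,      -- location in the local file system
    version INTEGER NOT NULL DEFAULT 0 -- last version synced with the server
);
CREATE TABLE IF NOT EXISTS chunks (
    file_id     INTEGER NOT NULL REFERENCES files(file_id),
    chunk_index INTEGER NOT NULL,      -- position of the chunk within the file
    chunk_hash  TEXT NOT NULL,         -- content hash identifying the chunk
    version     INTEGER NOT NULL,      -- file version that last wrote this chunk
    PRIMARY KEY (file_id, chunk_index)
);
"""

def open_metadata_db(path=":memory:"):
    """Open (or create) the hypothetical client metadata database."""
    conn = sqlite3.connect(path)
    conn.executescript(SCHEMA)
    return conn

conn = open_metadata_db()
with conn:  # the connection context manager commits on success, rolls back on error
    cur = conn.execute("INSERT INTO files (path, version) VALUES (?, ?)", ("docs/report.pdf", 1))
    fid = cur.lastrowid
    conn.executemany(
        "INSERT INTO chunks (file_id, chunk_index, chunk_hash, version) VALUES (?, ?, ?, ?)",
        [(fid, 0, "hash-a", 1), (fid, 1, "hash-b", 1)],
    )

rows = conn.execute(
    "SELECT chunk_index, chunk_hash FROM chunks WHERE file_id = ? ORDER BY chunk_index", (fid,)
).fetchall()
print(rows)  # [(0, 'hash-a'), (1, 'hash-b')]
```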

Chunker: The Chunker divides large files into 4 MB chunks and can also reconstruct the original file from these chunks.
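A sketch of fixed-size chunking and reassembly. Tagging each chunk with a SHA-256 digest is an assumption made here so the client can later tell which chunks changed and verify downloads; the article does not name a hash function, and the function names are hypothetical.

```python
import hashlib
import io

CHUNK_SIZE = 4 * 1024 * 1024  # 4 MB

def split_into_chunks(stream, chunk_size=CHUNK_SIZE):
    """Yield (index, sha256_hex, data) for each fixed-size chunk of a binary stream."""
    index = 0
    while True:
        data = stream.read(chunk_size)
        if not data:
            break
        yield index, hashlib.sha256(data).hexdigest(), data
        index += 1

def reassemble(chunks):
    """Rebuild the original bytes from (index, hash, data) tuples given in any order."""
    ordered = sorted(chunks, key=lambda c: c[0])
    for _, digest, data in ordered:
        if hashlib.sha256(data).hexdigest() != digest:
            raise ValueError("chunk failed integrity check")
    return b"".join(data for _, _, data in ordered)

original = b"abcdefghij"
chunks = list(split_into_chunks(io.BytesIO(original), chunk_size=4))  # tiny chunks for the demo
print([data for _, _, data in chunks])  # [b'abcd', b'efgh', b'ij']
```

Reading from a stream keeps memory use bounded to one chunk at a time, which matters for multi-gigabyte files.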

Watcher: The Watcher monitors the workspace for file changes such as updates, creations, and deletions, and notifies the Indexer of each change.
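Production clients typically subscribe to OS notification APIs (inotify on Linux, FSEvents on macOS, ReadDirectoryChangesW on Windows). For portability, the sketch below swaps in a simpler technique, polling: it snapshots each file's modification time and size, then diffs consecutive snapshots into creations, updates, and deletions. All names are hypothetical.

```python
import os
import time

def snapshot(root):
    """Map each file path under root (relative to root) to (mtime_ns, size)."""
    state = {}
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            try:
                st = os.stat(path)
            except FileNotFoundError:  # deleted between listing and stat
                continue
            state[os.path.relpath(path, root)] = (st.st_mtime_ns, st.st_size)
    return state

def diff_snapshots(old, new):
    """Return (created, updated, deleted) path lists between two snapshots."""
    created = sorted(p for p in new if p not in old)
    deleted = sorted(p for p in old if p not in new)
    updated = sorted(p for p in new if p in old and new[p] != old[p])
    return created, updated, deleted

def poll(root, interval=1.0):
    """Yield (created, updated, deleted) whenever the workspace changes."""
    previous = snapshot(root)
    while True:
        time.sleep(interval)
        current = snapshot(root)
        changes = diff_snapshots(previous, current)
        if any(changes):
            yield changes  # in the client, these events would be handed to the Indexer
        previous = current

old = {"a.txt": (100, 10), "b.txt": (100, 20)}
new = {"a.txt": (200, 12), "c.txt": (300, 5)}
print(diff_snapshots(old, new))  # (['c.txt'], ['a.txt'], ['b.txt'])
```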

Indexer: The Indexer listens for events from the Watcher and updates the Client Metadata Database with information about modified file chunks. It also informs the Synchronizer after committing changes to the database.
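The core of the Indexer's job, working out which chunks of a modified file actually changed, can be sketched by comparing the old and new per-chunk hash lists. This assumes chunks are identified by content hashes; `ChunkDelta` and `diff_chunk_hashes` are hypothetical names.

```python
from dataclasses import dataclass

@dataclass
class ChunkDelta:
    changed: list  # chunk indices whose content differs or that are new
    removed: list  # trailing chunk indices that no longer exist (file shrank)

def diff_chunk_hashes(old, new):
    """Compare per-chunk hash lists of two versions of the same file."""
    changed = [i for i, h in enumerate(new) if i >= len(old) or old[i] != h]
    removed = list(range(len(new), len(old)))
    return ChunkDelta(changed, removed)

print(diff_chunk_hashes(["a", "b", "c"], ["a", "X", "c", "d"]))
# ChunkDelta(changed=[1, 3], removed=[])
```

Only the indices in `changed` need uploading. One caveat of fixed-size chunks: inserting bytes near the start of a file shifts every later chunk boundary, so all following chunks show up as changed. Content-defined chunking with a rolling hash is a common way to mitigate this.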

Meta Service: The Meta Service synchronizes file metadata between clients and the server. It determines the change set for each client and broadcasts it to them through the Notification Service. The database behind the Meta Service needs strong ACID guarantees, which makes relational databases such as MySQL or PostgreSQL suitable choices. An in-memory cache such as Redis or Memcached can sit in front of the database to speed up reads.
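One way the Meta Service could compute change sets and detect concurrent edits is sketched below, assuming per-file version numbers with an optimistic concurrency check plus an ordered change journal that clients read from a cursor. The class names and in-memory storage are hypothetical; the lock only stands in for a database transaction.

```python
import threading

class VersionConflict(Exception):
    """Raised when a client edits a version of the file that is no longer current."""

class MetaService:
    """Hypothetical in-memory Meta Service; a lock stands in for a database transaction."""

    def __init__(self):
        self._lock = threading.Lock()
        self._files = {}    # file_id -> (version, chunk_hashes)
        self._journal = []  # ordered change log of (sequence_no, file_id, version)

    def commit(self, file_id, base_version, chunk_hashes):
        """Accept an edit only if it was made against the latest version (optimistic concurrency)."""
        with self._lock:
            current, _ = self._files.get(file_id, (0, []))
            if base_version != current:
                raise VersionConflict(f"{file_id}: based on v{base_version}, server has v{current}")
            new_version = current + 1
            self._files[file_id] = (new_version, list(chunk_hashes))
            self._journal.append((len(self._journal) + 1, file_id, new_version))
            return new_version

    def changes_since(self, cursor):
        """Return the change set a client has not seen yet, plus the cursor to send next time."""
        with self._lock:
            pending = [entry for entry in self._journal if entry[0] > cursor]
            latest = self._journal[-1][0] if self._journal else cursor
            return pending, latest

meta = MetaService()
v1 = meta.commit("report.pdf", base_version=0, chunk_hashes=["h0", "h1"])
v2 = meta.commit("report.pdf", base_version=1, chunk_hashes=["h0", "h2"])
try:
    meta.commit("report.pdf", base_version=1, chunk_hashes=["h9"])  # stale edit from another device
except VersionConflict as err:
    print("conflict:", err)  # conflict: report.pdf: based on v1, server has v2

changes, cursor = meta.changes_since(0)
print(changes, cursor)  # [(1, 'report.pdf', 1), (2, 'report.pdf', 2)] 2
```

A rejected commit is where the client's conflict handling kicks in; how to resolve it (for example, keeping both versions) is a policy choice. A real journal would be an indexed table rather than a list scanned on every call.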

Block Storage Service: The Block Storage Service handles uploading and downloading file chunks to and from block storage. When a client finishes uploading a file's chunks, it notifies the Meta Service so the file metadata can be updated. Block storage can be implemented with a highly durable object store such as Amazon S3.
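A sketch of how the upload path might send only the chunks the server lacks. The in-memory `BlockStore` stands in for an object store such as S3, and keying each chunk by its SHA-256 hash is an assumption of this sketch; a side effect is that identical chunks are stored only once. All names are hypothetical.

```python
import hashlib

class BlockStore:
    """In-memory stand-in for an object store, keyed by chunk content hash."""

    def __init__(self):
        self._blobs = {}

    def missing(self, hashes):
        """Report which of the given chunk hashes the store does not have yet."""
        return [h for h in hashes if h not in self._blobs]

    def put(self, data):
        key = hashlib.sha256(data).hexdigest()
        self._blobs[key] = data
        return key

    def get(self, key):
        return self._blobs[key]

def upload_chunks(store, chunks):
    """Upload only the chunks the store lacks; return how many were actually sent."""
    by_hash = {hashlib.sha256(c).hexdigest(): c for c in chunks}
    to_send = store.missing(list(by_hash))
    for h in to_send:
        store.put(by_hash[h])
    return len(to_send)

store = BlockStore()
first = upload_chunks(store, [b"aaaa", b"bbbb"])   # both chunks are new
second = upload_chunks(store, [b"aaaa", b"cccc"])  # only the changed chunk is sent
print(first, second)  # 2 1
```

After `upload_chunks` returns, the client (or the service) would tell the Meta Service about the new chunk list.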

Notification Service: The Notification Service broadcasts file changes to connected clients so that every watching client receives updates promptly. It can be implemented using HTTP long polling, WebSockets, or Server-Sent Events. Before sending anything to clients, the Notification Service reads change messages from a message queue, which can be implemented using Kafka. This decoupling lets the Meta Service and the Notification Service scale independently without slowing each other down.
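The queue-based decoupling can be sketched with Python's `queue.Queue` standing in for a Kafka topic: the Meta Service only enqueues change events, and the Notification Service drains them and fans each one out to the clients watching that file. The event fields and class names are assumptions; in a real deployment each outbox would be flushed over a long-polling, WebSocket, or Server-Sent Events connection.

```python
import queue
from collections import defaultdict

class NotificationService:
    """Hypothetical notifier: drains change events and fans them out to watching clients."""

    def __init__(self, events):
        self.events = events               # shared queue; the Meta Service is the producer
        self.watchers = defaultdict(set)   # file_id -> ids of clients watching that file
        self.outbox = defaultdict(list)    # client id -> messages awaiting delivery

    def watch(self, client_id, file_id):
        self.watchers[file_id].add(client_id)

    def drain(self):
        """Deliver every queued event to each watcher except the client that made the change."""
        delivered = 0
        while True:
            try:
                event = self.events.get_nowait()
            except queue.Empty:
                break
            for client in self.watchers[event["file_id"]]:
                if client != event["origin"]:  # the editing client already has this change
                    self.outbox[client].append(event)
                    delivered += 1
        return delivered

events = queue.Queue()
notifier = NotificationService(events)
notifier.watch("laptop", "report.pdf")
notifier.watch("phone", "report.pdf")

# Producer side: the Meta Service publishes a committed change and moves on.
events.put({"file_id": "report.pdf", "version": 2, "origin": "laptop"})
delivered = notifier.drain()
print(delivered, notifier.outbox["phone"])
```

Because the producer never waits on client connections, a slow or offline device affects only its own outbox, not the Meta Service.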

Here's a more detailed explanation 👇